Empowering Enterprises with GenAI Solutions in Hong Kong or Worldwide

What is GenAI?

Generative AI, or GenAI, is a branch of artificial intelligence that focuses on creating new content, including text, images, audio, and video. By learning patterns from vast datasets, GenAI models can generate innovative and diverse content that closely resembles human-created work. Unlike traditional AI systems designed for specific tasks like classification or prediction, GenAI excels in producing novel and contextually relevant content. Cutting-edge GenAI technologies include advanced language models like GPT-4o, Llama 3, Mistral, and Gemini Ultra, which can compose articles and academic papers, as well as powerful visual generators such as Stable Diffusion, DALL-E, and Sora, capable of creating realistic images and short videos from simple text prompts.

In short, GenAI is a groundbreaking AI technology that creates original content across various formats by learning from massive datasets, with advanced models like GPT-4o and Stable Diffusion generating human-like text, images, and videos from simple prompts.

How enterprises are Using GenAI in Hong Kong

As GenAI continues to transform industries, enterprises in Hong Kong are quickly adopting these technologies to enhance operations, improve customer experiences, and drive data-driven decision-making. Common applications of GenAI in Hong Kong include:

AI-Powered Chatbots and Virtual Assistants: Companies are deploying GenAI chatbots to provide round-the-clock customer support, handle inquiries, and deliver personalized interactions across multiple channels.

Intelligent Content Creation: Businesses are harnessing the power of GenAI to generate high-quality marketing content, product descriptions, images, and social media posts, saving time and resources while maintaining a consistent brand voice.

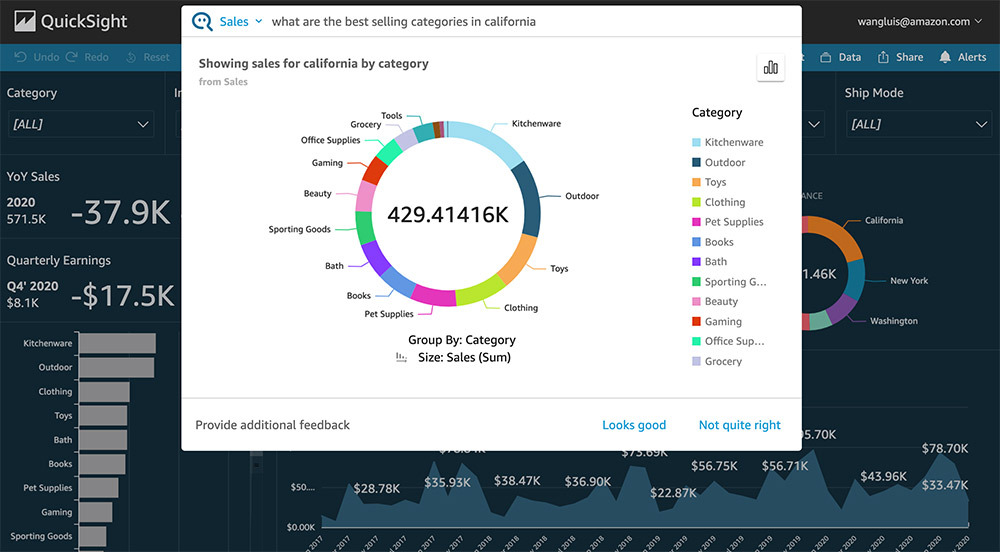

Text to SQL/text to charts: Enterprises are increasingly leveraging GenAI to bridge the gap between natural language and data analytics. By using text-to-SQL technologies, users can query databases using plain language, transforming complex data retrieval into a more intuitive process. This enables employees without extensive technical knowledge to extract valuable insights from large datasets quickly. Additionally, text-to-charts tools are empowering users to generate visual representations of data through simple text commands, facilitating a deeper understanding of data trends and aiding in more informed decision-making. This democratization of data access is enhancing productivity and fostering a more data-driven culture within organizations.

What is the Difference Between GenAI and LLM?

While GenAI encompasses a wide range of AI systems that generate new content, Large Language Models (LLMs) are a specific subset focused on processing and producing human language. LLMs like GPT-4o are trained on extensive text datasets to generate coherent and contextually relevant language, making them essential for various natural language tasks, such as translation, summarization, and question-answering. Although LLMs are crucial components of many GenAI applications, GenAI also includes other generative models that create images, videos, and other media.

What are the Limitations of GenAI?

Despite their immense potential, GenAI technologies come with significant challenges. One primary concern is the risk of perpetuating biases and misinformation present in training data, which can lead to inaccurate or harmful outputs. Effective prompt engineering and human oversight are essential to maintain quality and ensure outputs align with specific goals.

Ethical issues, such as the potential misuse of GenAI for creating deceptive content or impersonating individuals, also require careful consideration and proactive measures.

Prompt injection, the process of overriding original instructions in the prompt with special user input can cause reputational loss and harmful output.

Additionally, GenAI cannot replace traditional machine learning tasks such as classification, regression, and clustering, which are essential for certain structured data analysis and predictive modelling.

What are some pain points companies have when implementing GenAI solutions?

Enterprises often face several pain points when implementing GenAI solutions, including:

- Lack of in-house expertise and knowledge to develop and deploy GenAI technologies effectively

- Unsure on best practice on how to control LLMs with guardrails and other techniques

- Lack of understanding of the state of the art GenAI techniques to get the most out of LLMs using the least cost

- Complex integration challenges when incorporating GenAI into existing systems and workflows

- Difficulties in ensuring data quality of chunking of PDF and other files

- Lack of understanding of the different LLMs and when to use open source compared to closed source

- Lack of understanding of the entire infrastructure of a GenAI platform on cloud or on premise

- Confused on when to use GenAI compared to traditional machine learning models and how to connect them

- Navigating ethical concerns, such as bias, privacy, and responsible use, to maintain trust and compliance

- Challenges in quantifying the return on investment (ROI) for GenAI projects, making it harder to justify costs and resources

How can we solve these pain points?

ThinkCol is a bespoke customized solution firm that helps enterprises deploy AI and GenAI solutions. Since 2016 we have been building machine learning solutions and in 2023 we started deploying GenAI Solutions. Recently we have been focusing on deploying GenAI Platforms for clients to solve pain points such as above.

ThinkCol Enterprise GenAI Platform is designed to leverage the latest advancements in generative AI to transform business operations, streamline workflows, drive data-driven decision-making, and solve the pain points mentioned above. The platform integrates robust features to support enterprises in adopting and scaling GenAI solutions effectively.

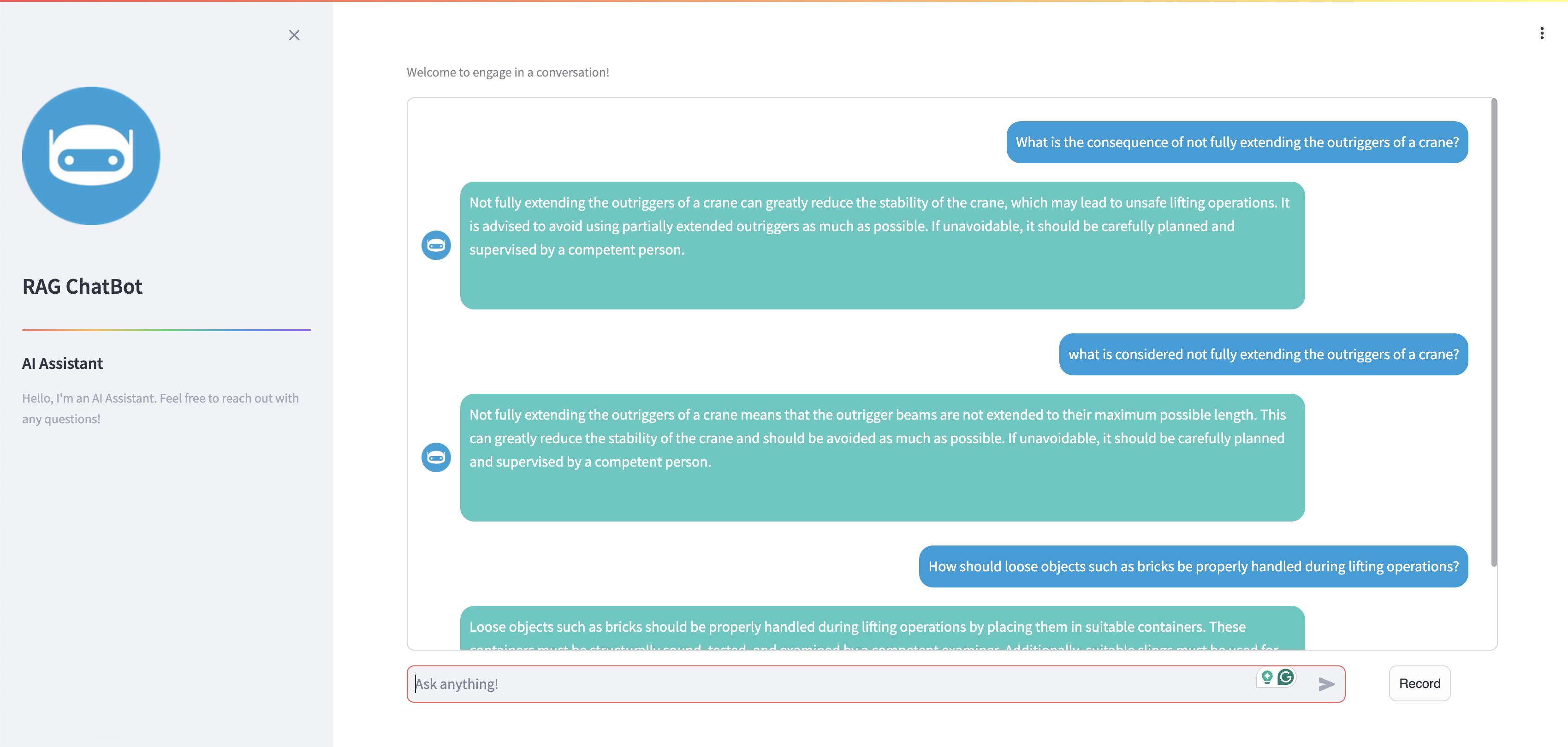

ThinkCol’s Enterprise GenAI Platform empowers organizations with greater control over their RAG (Retrieval-Augmented Generation) chatbots. Currently, most enterprise is stuck at a PoC stage. However we firmly believe that they need to develop their GenAI Platform to move PoC sto production.

With features like RAG chatbots, automatic deployment, knowledge base management, version control, chatbot tuning, and chatbot monitoring, our solution ensures that chatbots remain accurate, reliable, and aligned with organizational goals.

This section will cover the platform's functionalities, including data processing techniques, retrieval methods, chatbot orchestration, and MLOps monitoring, providing a holistic view of how our platform enhances chatbot performance and data-driven decision-making.

Platform Functionality - RAG (Retrieval-Augmented Generation)

Guardrails: Our platform implements robust and customizable safeguards to ensure responsible AI-powered chatbot capabilities. These guardrails include:

- Content filtering

- Blacklisted questions

- Prompt shields to prevent harmful or irrelevant responses.

Additionally, our guardrails can block out specific questions and topics that organizations don't want users to ask, ensuring the chatbot remains focused and aligned with approved subjects. Users can customize a set of blacklisted questions based on topics, keywords, and phrases to further refine the filtering process.

Data Processing: High-quality data input is crucial for accurate chatbot responses. Our platform employs advanced data processing techniques to ensure this.

- Chunking Technique: We use fixed-size and context-aware chunking strategies to break down data into manageable pieces, optimizing processing and analysis.

- Data CleansingThe platform cleanses data to remove irrelevant or redundant information, ensuring only high-quality data is used.

- Key Info Extraction: Advanced algorithms extract key information from documents, ensuring the chatbot can access and use this data effectively.

Knowledge Base Management: The platform includes a Document Management System (DMS) for storing processed data.

- Create/Delete Index: Users can create and delete indexes in the knowledge base, allowing for efficient data organization and retrieval.

- Create/Delete Knowledge: The platform allows users to manage knowledge entries, ensuring the knowledge base remains up-to-date and relevant.

Fine-Tune Chatbot: Users can fine-tune the chatbot to align with specific requirements and improve performance.

Configure Chatbot: The platform provides tools to configure the chatbot's behavior, identity, and mission, ensuring it aligns with organizational goals.

- Behavior: Define how the chatbot should manage conversations and respond to queries.

- Identity: Configure the chatbot’s persona and style of interaction.

- Mission: Align the chatbot’s objectives with organizational goals.

Data Retrieval Techniques: Our platform employs various advanced techniques to retrieve the most relevant data.

- Semantic Search: Uses vector cosine similarity to understand and retrieve data based on meaning.

- Keyword Search: Utilizes traditional keyword-based search methods for straightforward data retrieval.

- Hybrid Search: Combines semantic and keyword search methods for more accurate results.

- Fusion of Multi-Index Retrieval: Combines multiple document representations to ensure comprehensive data retrieval.

LLMops MonitoringThe platform includes robust monitoring and alerting mechanisms to ensure optimal performance and reliability. We will provide the monitoring in the form of a dashboard and will include the following:

- Initial Test Cases

- Feedback System

- Validation System

- Alert on Chatbot Performance Change

- Alert on Document Version Change

- Performance Monitoring (Alert): Monitors chatbot performance and sets alerts for irregularities.

- Validation: Ensures the accuracy, relevancy, and correctness of generated answers.

- Auto Deploy: Facilitates automated deployment of updates and changes to the chatbot.

By integrating these functionalities, ThinkCol's GenAI Platform ensures that organizations can create, manage, and optimize their RAG chatbots effectively, enhancing data-driven decision-making and productivity.

How can Thinkcol Help?

ThinkCol also has done a lot of other GenAI use cases in various industries. As a pioneer in adopting GenAI technologies in Hong Kong, ThinkCol applies cutting-edge AI solutions across various sectors, including retail, property, finance, government, healthcare, supply chain, education, and broadcasting. Our expertise in leveraging Large Language Models (LLMs) showcases our ability to combine advanced AI tools with practical, real-world applications.

For example, for a retail company, we have employed LLMs to enhance internal document search, making sales and staff better understand store performances. Our solution involves automatically generating a summary of the day for all stores in which the staff (e.g. store manager) will be able to further dig deep into the data by chatting with the LLM. While leveraging the company’s datasets, our solution offers extensive support in Cantonese, Traditional Chinese, and English to cater to different language needs through training open-source LLMs.

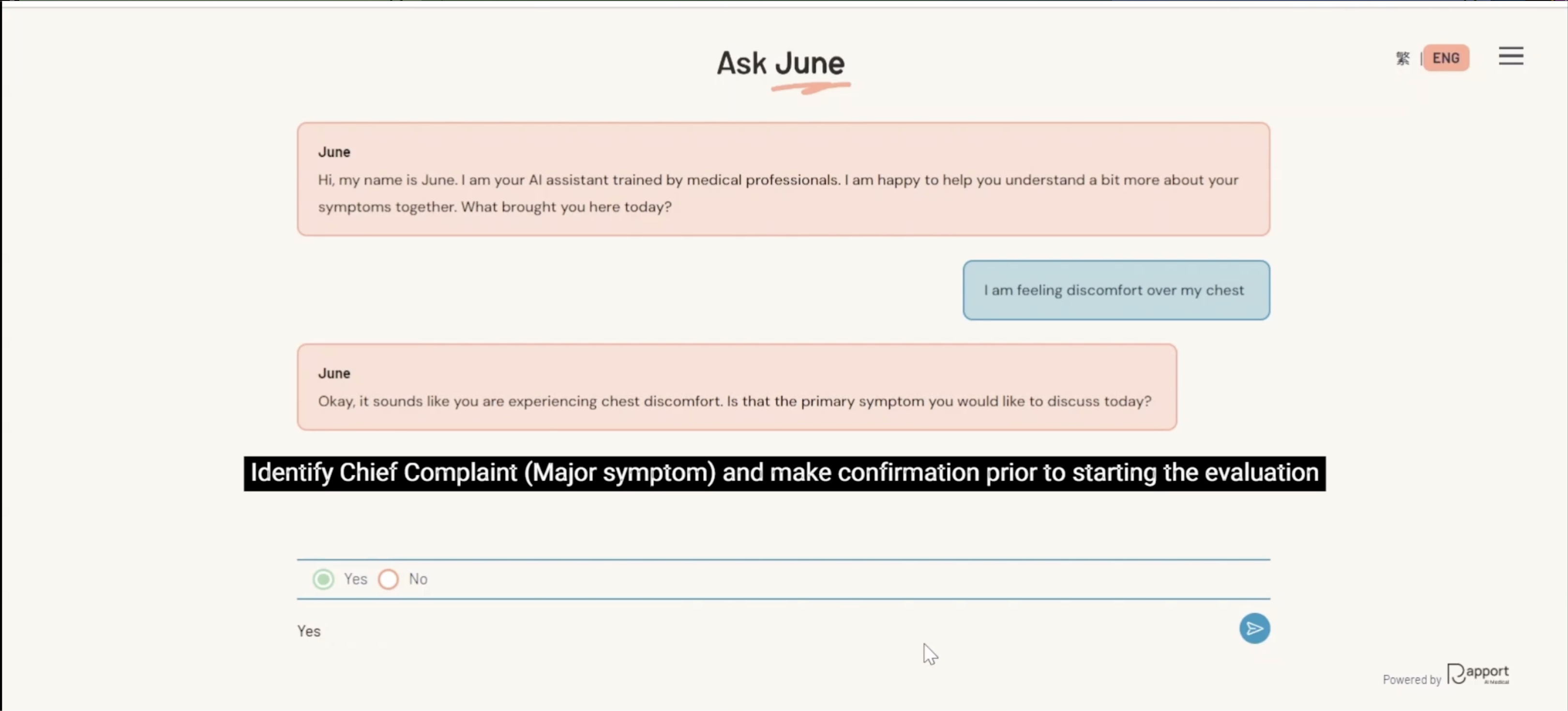

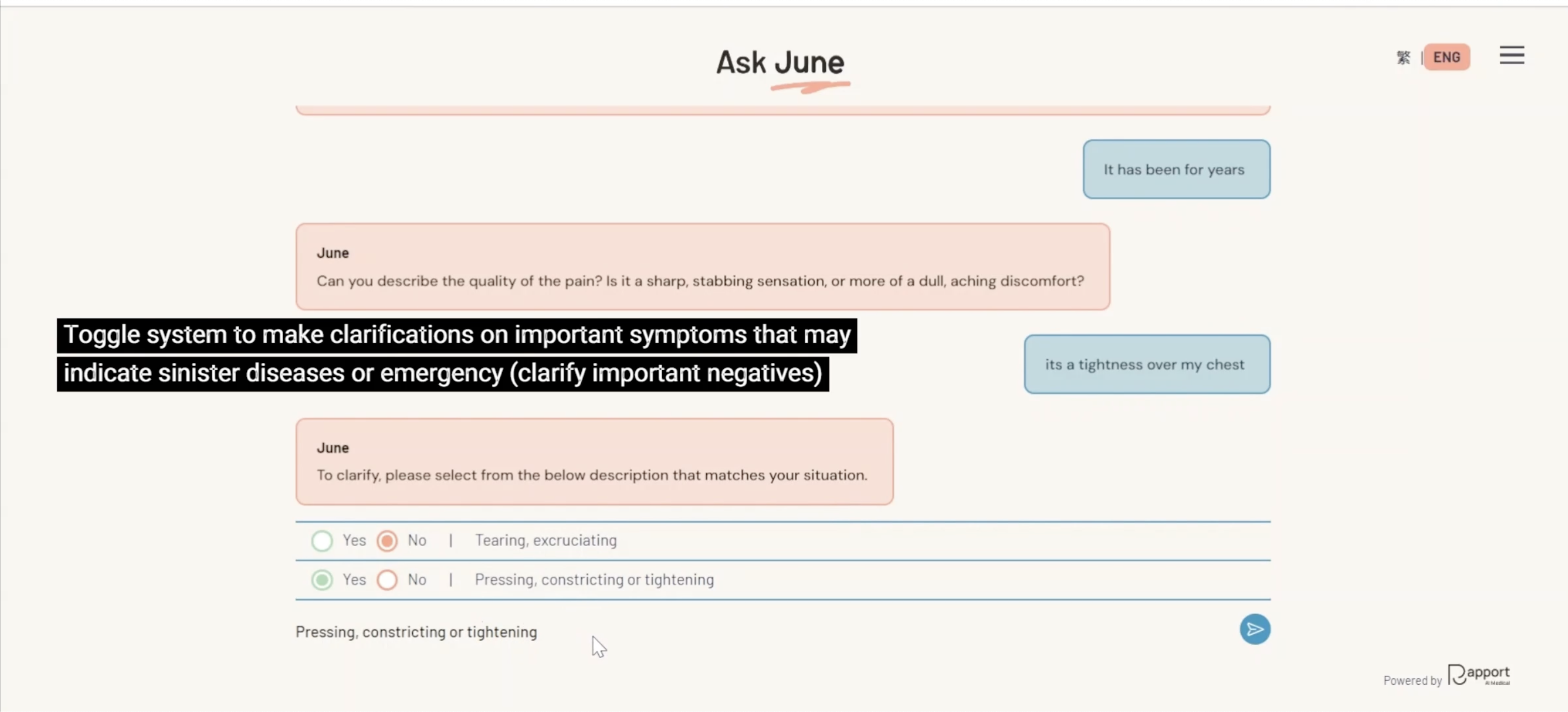

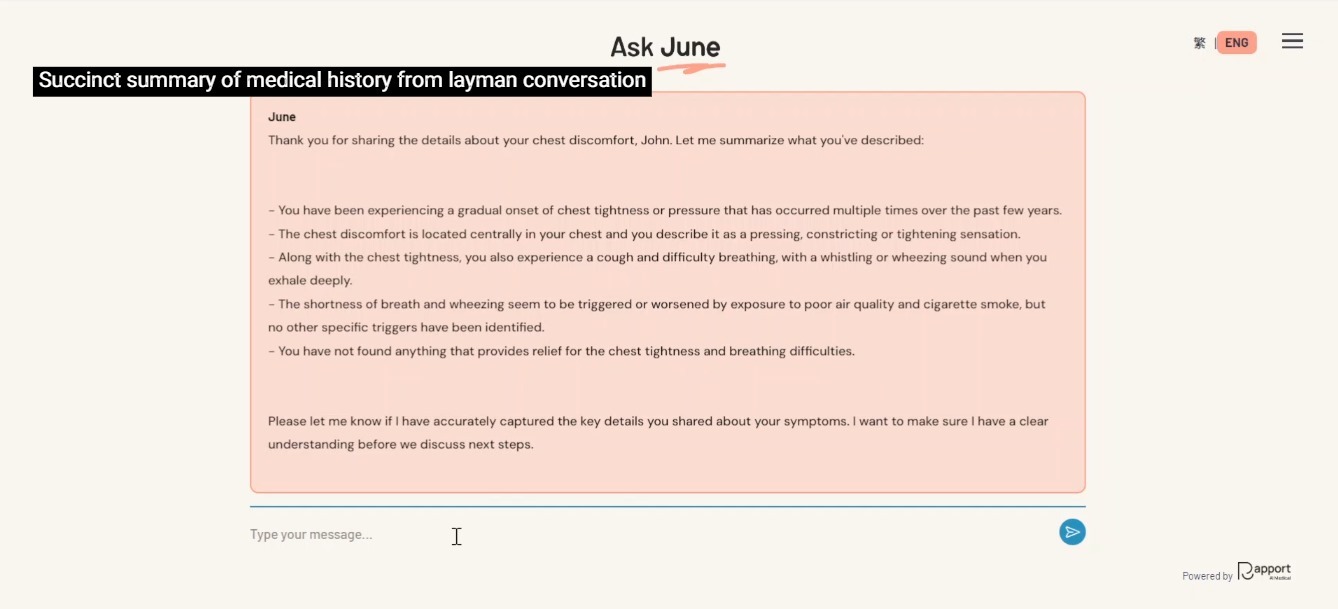

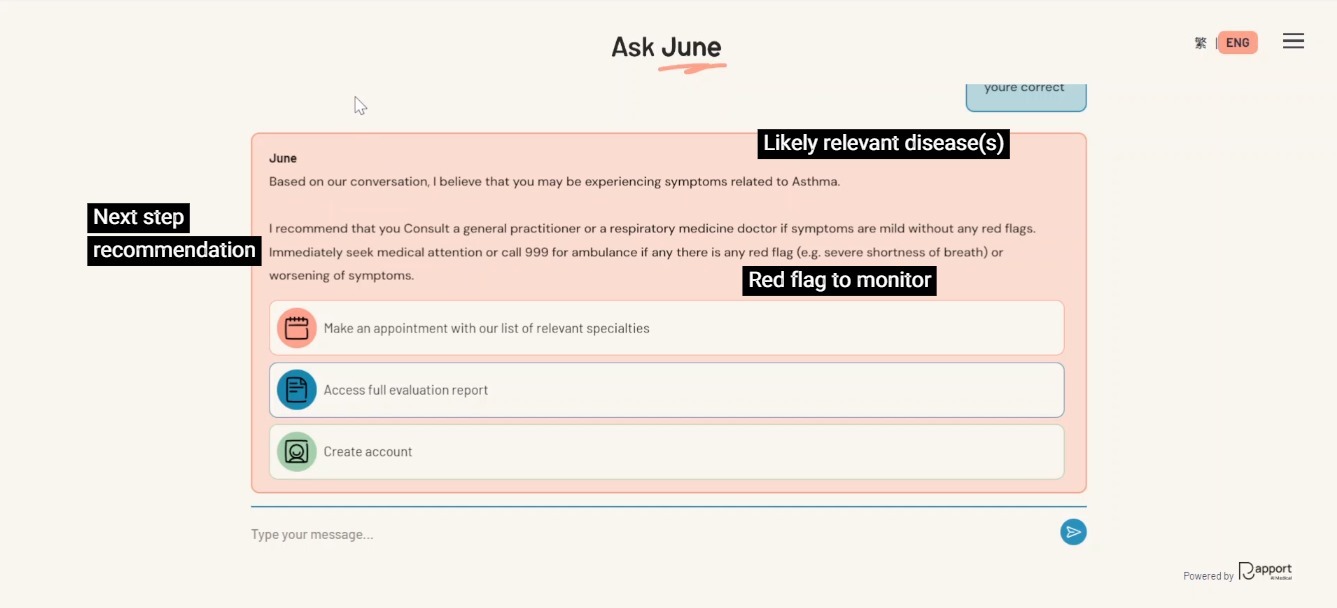

In the healthcare sector, we helped a medical platform revolutionize the patient consultation process with the integration of LLM. We developed a sophisticated chatbot to conduct initial medical consultations, which is able to interact with patients in a humanlike manner, gather information about their symptoms, and provide insights. We used prompt engineering and client’s professional medical knowledge on how a doctor usually makes a diagnosis, ThinkCol aims to ensure the provisional and differential diagnoses are practical and useful for the patients. They can therefore follow up on their situation by visiting the relevant specialist suggested by the chatbot.

We also integrate these models with social platforms to improve user interaction and streamline communication. Our LLMs, connected to APIs, automate and optimize services and operations, while our custom LLM portals provide users with direct access to powerful AI-driven tools, simplifying complex tasks and supporting data-driven decision-making.

Furthermore, ThinkCol harnesses Stable Diffusion models to revolutionize content creation, enabling the automatic generation of visuals from text prompts. This opens up exciting possibilities for creative marketing and personalized media production. Check out our video below to learn more!

Across industries, ThinkCol identifies high-impact GenAI use cases, develops tailored solutions, and seamlessly integrates them into existing business workflows, ensuring smooth digital transformation and sustainable growth. To learn more about our successful LLM case studies, please visit here.