LLMs/ChatGPT for Corporates in Hong Kong or Worldwide

What is the current hype around LLMs and ChatGPT?

ChatGPT is like a real-life version of Iron Man’s Jarvis. It draws on knowledge from across the internet, allowing you to ask questions and get answers, create content, get recommendations, and even write code!

From a technical perspective, ChatGPT is a Large Language Model (“LLM”) created by OpenAI. An LLM is a type of natural language processing model that is based on deep learning and trained on massive amounts of text data, much of it drawn from the internet.

Over the past couple of years, companies have been using LLMs to solve typical NLP use cases such as question answering, chatbots, sentiment analysis, article summarization and text classification.

ChatGPT takes LLMs to the next level by offering a chat version of an LLM that responds with human-like answers to many types of questions.

In summary, LLM is the more general term that describes a class of language models, while ChatGPT is a specific model designed for conversational applications. ChatGPT is a type of LLM, but not all LLMs are designed for conversational applications. There are many LLMs other than ChatGPT that developers, including us at ThinkCol, have used, such as Claude, Gemini and Llama.

What are some use cases for corporates using LLMs and ChatGPT in Hong Kong?

LLMs, such as ChatGPT, have a wide range of potential use cases for corporates in Hong Kong, including:

Chatbots and Virtual Assistants:

ChatGPT/LLMs can be used to create chatbots and virtual assistants that are much better than previous chatbots. LLMs understand questions and generate answers so human-like that you may think you are talking to a person.

Here are some examples:

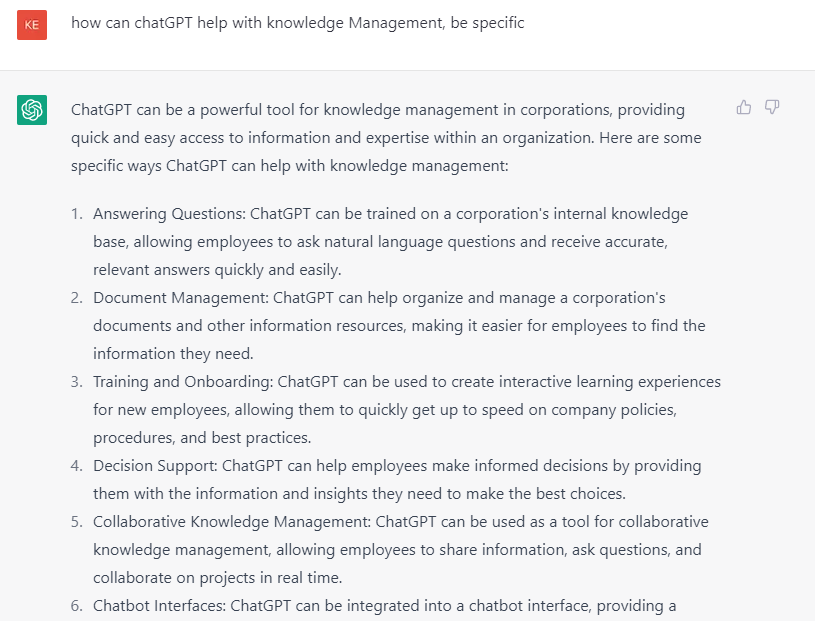

Knowledge Management: ChatGPT/LLMs can answer questions that are specific to a company. By feeding PDFs and Word documents to ChatGPT/LLMs, a corporation can make its internal knowledge base available to the model, allowing employees and clients to ask questions and receive accurate answers quickly and easily.

Customer Service: ChatGPT/LLMs can be used to improve customer service by providing intelligent and personalized responses to customer inquiries. By analysing customer messages and providing relevant and accurate responses, ChatGPT/LLMs can help companies provide fast and efficient customer support.

Lead Generation: ChatGPT/LLMs can be used to engage with potential customers and generate leads by conversing in a human-like way and persuading prospects to take an interest in the company.

Morning Briefing: ChatGPT/LLMs can be linked to company data to give each store a morning briefing that advises the store manager on what to improve. This gives the store manager a tailor-made report each day on how to improve efficiency or other metrics. The manager can then dig deeper into the data through a chat interface powered by the ChatGPT/LLM. It is as if each store manager has his/her own personal business analyst!

Content Generation:

ChatGPT/LLMs can be used to quickly generate high-quality content, such as product descriptions, social media posts, and even entire articles. Currently, many digital marketing and content generation companies are leveraging ChatGPT extensively.

Here are some examples:

Blogging: ChatGPT/LLMs can be used to generate ideas for blog posts and even draft entire articles. By providing the AI language model with a topic, it can produce a well-written, informative article that can be used for a company's blog.

Social Media: ChatGPT/LLMs can be used to generate social media posts for platforms like Twitter, LinkedIn, and Facebook. These posts can be tailored to the brand's voice and style, while also incorporating keywords for search engine optimization (SEO).

Email Marketing: ChatGPT/LLMs can be used to generate subject lines and body copy for email marketing campaigns. By providing ChatGPT with information about the audience and campaign objectives, it can create personalized and engaging emails.

Product Descriptions: ChatGPT/LLMs can be used to generate product descriptions that are informative and engaging. This is particularly useful for eCommerce companies that have a large number of products and need to create descriptions quickly.

Writing tenders, proposals and any text-related documents: ChatGPT/LLMs can be used as a reference for best practices in writing tenders or proposals. By asking ChatGPT/LLMs for ideas, companies can reduce the time spent writing tenders, proposals or other text-related documents by half. For example, if you need to write an internal procedure, you can ask ChatGPT/LLMs what the best practice for that procedure is and use the answer as a reference when writing the rest.

Programming:

ChatGPT/LLMs have also been helping programmers to program faster. Because ChatGPT/LLMs have been trained on large amounts of code, they can draw on common best practices to help programmers with the following:

Code Generation and Code Completion: ChatGPT/LLMs can be used to create code from scratch as well as suggest code completion options. By analysing the code and the programmer's style, ChatGPT/LLMs can suggest the most appropriate options, thereby saving time and reducing errors.

Optimizing code: Some programmers feed their code to ChatGPT/LLMs and ask how it can be improved and optimized. ChatGPT/LLMs can suggest ways to improve the code and make the entire program more efficient.

Documentation: ChatGPT/LLMs can be used to generate documentation for code libraries, APIs, and other software projects. For example, a programmer can feed ChatGPT a program and ask it to comment the code or to create a document that explains how to use the code. This saves the programmer a lot of time in creating such documentation.

Project and Knowledge Management:

ChatGPT/LLMs can help with knowledge management in a company. ChatGPT/LLMs have know-how in different project management tools and can provide guides and best practices on how to use them.

Here are some examples:

Documentation: ChatGPT/LLMs can be used to generate documentation, such as meeting notes, project plans, and reports. For example, a user can paste raw meeting notes into ChatGPT and ask it to create a detailed report.

Standard Operating Procedures: If you let ChatGPT/LLMs know what tools you use, such as Google Workspace, Notion or QuickBooks, ChatGPT/LLMs can write out standard operating procedures as a step-by-step guide. This helps the company keep track of finance, workload, milestones and more.

The above is just a sample of use cases for ChatGPT and LLMs. Overall, LLMs have the potential to enhance a wide range of corporate processes and functions by improving accuracy, increasing efficiency, and reducing costs.

What are some limitations of ChatGPT/LLMs and problems for corporate use of ChatGPT/LLMs in Hong Kong or worldwide?

Inability to Reason/Hallucination: While ChatGPT/LLMs can generate text that appears human-like, they do not actually understand the meaning of the text. This means ChatGPT may generate nonsensical or inaccurate responses if the input is ambiguous or the context is not clear. ChatGPT is known to hallucinate, that is, to make things up and present them as true. This has a negative effect on clients, who can no longer trust the content.

It is therefore crucial to put guardrails around the LLM or ChatGPT. At ThinkCol we have created our own guardrails that focus on detecting prompt injections, political topics and out-of-context answers. We then control the response through different programmatic and LLM-based methods to ensure there is no reputational loss for our clients.
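To make the idea concrete, here is a minimal Python sketch of an input guardrail. It is an illustration only, not ThinkCol's actual guardrail: the patterns, the keyword list, the fallback message and the function names (`check_input`, `guarded_reply`) are all assumptions chosen for this example, and a real system would likely combine rules like these with LLM-based checks.

```python
import re

# Hypothetical patterns for illustration; a real deployment needs a much broader set.
INJECTION_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"disregard (the |your )?(system|previous) prompt",
    r"reveal (the |your )?(system )?prompt",
]

# Hypothetical keywords for topics the assistant should decline.
POLITICAL_TERMS = ("election", "political party", "government policy")

FALLBACK = "Sorry, I can only help with questions about our products and services."


def check_input(user_message: str) -> str:
    """Classify a user message as 'injection', 'politics' or 'ok'."""
    text = user_message.lower()
    if any(re.search(pattern, text) for pattern in INJECTION_PATTERNS):
        return "injection"
    if any(term in text for term in POLITICAL_TERMS):
        return "politics"
    return "ok"


def guarded_reply(user_message: str, generate) -> str:
    """Call the LLM (passed in as `generate`) only when the input passes the checks."""
    if check_input(user_message) != "ok":
        return FALLBACK
    return generate(user_message)
```

Here `generate` stands for whatever function calls your LLM. Keeping it as a parameter means the checks run before any tokens are sent to the model, and the same wrapper works with any provider.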

Limited Context: ChatGPT/LLMs have a limited understanding of context and may not accurately remember earlier text. This can lead to errors or misunderstandings in generating responses, language translation, sentiment analysis, and other tasks. Each LLM has its own context window, measured in tokens. A good developer will try to limit the number of tokens given to the LLM, as APIs usually charge by the token.

Privacy and Security: ChatGPT is a cloud API hosted by OpenAI or Azure, so it is not possible to self-host ChatGPT. Even though the level of privacy and security offered by ChatGPT is high, some companies prefer to keep the LLM on premise. There are open-source models that can be self-hosted, such as Llama. One additional perk is that fine-tuning is also possible for open-source models. We at ThinkCol have extensive experience in helping clients to host and fine-tune open-source models.

Failure in simple scenarios: While ChatGPT/LLMs can generate text that appears human-like, they may fail at tasks such as difficult mathematical questions. That is because ChatGPT is built to predict the next word; it does not understand the underlying logic.

Limited to Training Data: An LLM only knows what it was trained on, unless additional information is supplied in the prompt. ChatGPT, for example, is trained only up to a certain cut-off date, so it cannot answer questions about more recent events.

Bias: LLMs may reflect the biases of the data used to train them. If the training data contains biased or discriminatory language, the model may reproduce these biases when generating or analysing text. This is a problem for companies, as the LLM might say things that do not reflect the corporate image.

It's important to be aware of these limitations and to use LLMs in combination with human expertise and judgment to ensure the best possible outcomes.
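As a small illustration of the Limited Context point above, the sketch below keeps only the most recent chat messages that fit within a token budget. It is a simplified example under stated assumptions: `estimate_tokens` uses a rough rule of thumb of about four characters per token for English text rather than a real tokenizer, and the function names and message format are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate, assuming about 4 characters per token (not a real tokenizer)."""
    return max(1, len(text) // 4)


def trim_history(messages, max_tokens):
    """Keep the newest messages whose estimated token total fits within max_tokens."""
    kept = []
    used = 0
    # Walk backwards so the most recent messages are kept first.
    for message in reversed(messages):
        cost = estimate_tokens(message["content"])
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

In practice you would replace `estimate_tokens` with the tokenizer that matches your chosen model, since token counts differ between LLMs.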

Do ChatGPT/LLMs understand different languages?

Yes, ChatGPT has been trained on a large dataset of text from multiple languages, so it can understand and generate text in many different languages. However, its ability to understand and generate text in a particular language may depend on the amount and quality of training data available in that language.

Some of the languages that ChatGPT handles particularly well include English, Spanish, French, German, Italian, Portuguese, Chinese, Japanese, Korean, and Arabic, as there is a large amount of high-quality training data available for these languages. However, it can still understand and generate text in many other languages to varying degrees, depending on the availability and quality of training data for those languages.

How can an AI consultancy like ThinkCol help you with ChatGPT/LLMs?

An AI consultancy like ThinkCol can help you with ChatGPT/LLMs in several ways, including:

Identifying Use Cases: An AI consultancy like ThinkCol can help you identify potential use cases for ChatGPT/LLMs within your organization, and determine where these models can be most effective in improving operations, customer experience, or other key metrics. ThinkCol will also guide you on where the limitations are with the ChatGPT/LLMs and what you have to be careful about.

Solving ChatGPT/LLM limitations and ethical considerations: As mentioned above, ChatGPT/LLMs have many limitations, such as data privacy, bias, hallucination, expensive deployment and limited context. However, ThinkCol has already developed solutions that address these problems, allowing corporations to use ChatGPT/LLMs with confidence.

Integration with Existing Systems: An AI consultancy such as ThinkCol can help you integrate ChatGPT/LLMs with existing systems and processes, such as CRM, ERP, and other enterprise applications. They can also help you develop custom applications and interfaces for ChatGPT/LLMs to make them more accessible and user-friendly for your employees or customers.

Prompt Engineering: Prompt engineering involves designing and crafting high-quality prompts, which are the text inputs given to ChatGPT/LLMs to generate their responses. Well-crafted prompts guide ChatGPT/LLMs towards more accurate and relevant responses, while poorly designed prompts can lead to inaccurate or irrelevant ones. Here at ThinkCol, we have the experience to craft the right prompts so that you get the most out of ChatGPT/LLMs.

Guardrails (Controlling LLMs): ThinkCol has created its own guardrails to manage the risks associated with deploying LLMs. We provide expertise in setting up these controls, ensuring your LLM operates within ethical boundaries and adheres to compliance standards. We help block prompt injection, hallucinations and out-of-context responses, and we develop monitoring systems that flag and address inappropriate outputs, securing both your data and your reputation.

Fine-tuning: Fine-tuning an LLM is about tailoring an open-source model to the specific needs of your business. At ThinkCol, we streamline this process by preparing targeted datasets, adjusting model parameters, and optimizing performance.

Open-source LLMs: Due to security or privacy concerns, some companies prefer open-source LLMs. For example, if you have sensitive sales data, you might not want to expose it to a cloud API. However, deploying LLMs takes a lot of time and work. We at ThinkCol will help you with deployment, whether on premise or in the cloud. Our deployment strategies focus on scalability, security, and efficiency, enabling you to benefit from community-driven innovations while maintaining a robust AI infrastructure.

Connecting to Data: Integrating LLMs with your data ecosystems is a critical step in leveraging their full potential. ThinkCol ensures a smooth connection between your LLMs and various data sources, whether they're in the cloud or on-premises. We handle the technicalities of data ingestion, processing, and management, enabling your LLMs to generate insights and add value to your business in real time. This gives you an LLM business analyst at your fingertips at any time.

Overall, an AI consultancy like ThinkCol can provide expert guidance and support for all aspects of ChatGPT/LLMs development and implementation, helping your organization get the most out of this powerful technology. We have experience in all of the above and are happy to share our experience with you.

How much internal data needs to be given to ChatGPT/LLMs to learn?

If your company wants to build a ChatGPT or LLM catered to your language and to your company’s specific information (i.e. creating an ABCCompanyGPT), there are two ways of doing it.

Fine-tuning

For the first option, you can train an existing LLM on a dataset that is specific to your domain of interest. This helps the model learn the specific language and terminology of the domain and improves its performance on tasks related to that domain. This is possible with open-source LLMs such as Llama but is generally more expensive. In return, the model actually learns from the data and its nuances, which the prompt engineering option does not achieve.
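As a rough illustration of the data preparation step, the sketch below turns question and answer pairs into chat-style training records and writes them as JSON Lines. The record layout (a `messages` list with `role` and `content` fields) and the function names are assumptions for this example; the exact format depends on the model and fine-tuning tool you choose.

```python
import json


def to_training_records(qa_pairs):
    """Turn (question, answer) pairs into chat-style records (field names are an assumption)."""
    records = []
    for question, answer in qa_pairs:
        records.append({
            "messages": [
                {"role": "user", "content": question.strip()},
                {"role": "assistant", "content": answer.strip()},
            ]
        })
    return records


def to_jsonl(records):
    """Serialize records as JSON Lines, one record per line."""
    # ensure_ascii=False keeps non-English text (for example Chinese) readable.
    return "\n".join(json.dumps(record, ensure_ascii=False) for record in records)
```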

Retrieval-Augmented Generation (RAG)

Another option is prompt engineering combined with the Retrieval-Augmented Generation (RAG) framework. An LLM can work within a corporate domain if it is given the relevant context from a vector database and then asked to generate an answer based on that context. In practice, the system first searches the vector database for the passages most relevant to the question, then passes those passages to the LLM in a prompt, which is a specific set of instructions that guides the LLM towards the correct response.

For example, if you want to build an ABCCompanyGPT that answers questions from clients about a particular company, you might provide it with a set of prompts that include information about the company's products, services, history, and policies. You could also include examples of common questions that clients might ask, such as "What are your hours of operation?" or "What is your return policy?".

ThinkCol specializes in using Machine Learning and NLP techniques to find the relevant information for a given question and feed it into a prompt. This allows the LLM to understand the context and answer relevantly.
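The retrieve-then-prompt flow described above can be sketched in a few lines of Python. This is a toy illustration, not ThinkCol's implementation: a bag-of-words count vector stands in for a real embedding model, a plain list stands in for the vector database, and the company facts in `documents` are made up for the example.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    query = embed(question)
    ranked = sorted(documents, key=lambda doc: cosine(query, embed(doc)), reverse=True)
    return ranked[:top_k]


def build_prompt(question, documents, top_k=1):
    """Place the retrieved context and the question into a single prompt."""
    context = "\n".join(retrieve(question, documents, top_k))
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say you do not know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


# Made-up knowledge base for a hypothetical ABC Company.
documents = [
    "Our hours of operation are 9am to 6pm, Monday to Saturday.",
    "Our return policy: items can be returned within 30 days with a receipt.",
    "ABC Company sells home appliances online and in stores.",
]

prompt = build_prompt("What are your hours of operation?", documents)
```

The resulting `prompt` would then be sent to the LLM of your choice. Telling the model to answer only from the supplied context is one common way to reduce hallucination.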

We also have experience with more advanced use cases, where we need to control the response, connect the LLM to certain data or APIs, or use the LLM to create multiple chains of logic to arrive at the correct solution.

Please also note that there are many advanced RAG techniques, such as chunking, hypothetical questions, context enrichment, hybrid search and query transformation, that improve the results of the LLMs. We at ThinkCol are experienced with such techniques and are happy to share our experience with you.
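Of the techniques listed, chunking is the simplest to show in code. The sketch below splits a document into overlapping chunks of words so that each chunk is small enough to retrieve on its own while still sharing some context with its neighbours. The function name and the default sizes are assumptions for illustration; suitable values depend on your documents and model.

```python
def chunk_words(text, chunk_size=200, overlap=50):
    """Split text into chunks of chunk_size words, each sharing `overlap` words with the previous one."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk already reaches the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks
```

Each chunk would then be embedded and stored in the vector database separately, so that a search returns focused passages rather than whole documents.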

How can you access ChatGPT or Claude in Hong Kong?

As of Feb 2024, direct access to ChatGPT or Claude via OpenAI's or Anthropic's web portals is not available in Hong Kong, as both companies have region-specific restrictions in place. However, one way residents can still use ChatGPT or Claude is through the Poe platform, which serves as an alternative access point for AI-powered conversational models. For those seeking enhanced features, platforms that collaborate with OpenAI, such as Azure OpenAI, offer API access by subscription. This method enables developers and businesses in Hong Kong to integrate ChatGPT's advanced capabilities into their own systems, despite the geographical restrictions on direct subscriptions.

How can ThinkCol help you with your ChatGPT and LLM needs?

As mentioned above, ChatGPT/LLMs have many limitations that ThinkCol has the expertise to work around. ThinkCol has been using GPT and LLMs since 2020 and has been doing NLP projects since 2016. ThinkCol has conducted extensive research into prompts and has leveraged different LLM APIs and open-source LLMs to create solutions for different clients. We have multiple use cases where we leverage LLM technology to perform NLP tasks for industries and organizations including real estate, FSI, government organizations, retailers and education.

We have the solutions and expertise to overcome the limitations of LLMs and ChatGPT and to ensure you get the most out of this new technology.